As AI advances, the focus has shifted from data volume to data quality and expert alignment. Early LLMs relied on massive, unfiltered datasets, which led to diminishing returns, bias propagation, and costly alignment issues. Today, curated, domain-specific datasets drive superior outcomes—something we also explored in detail in our guide on optimizing LLM training data in 2026

As AI advances, the focus has shifted from data volume to data quality and expert alignment. Early LLMs relied on massive, unfiltered datasets, which led to diminishing returns, bias propagation, and costly alignment issues. Today, curated, domain-specific datasets drive superior outcomes—something we also explored in detail in our guide on optimizing LLM training data in 2026

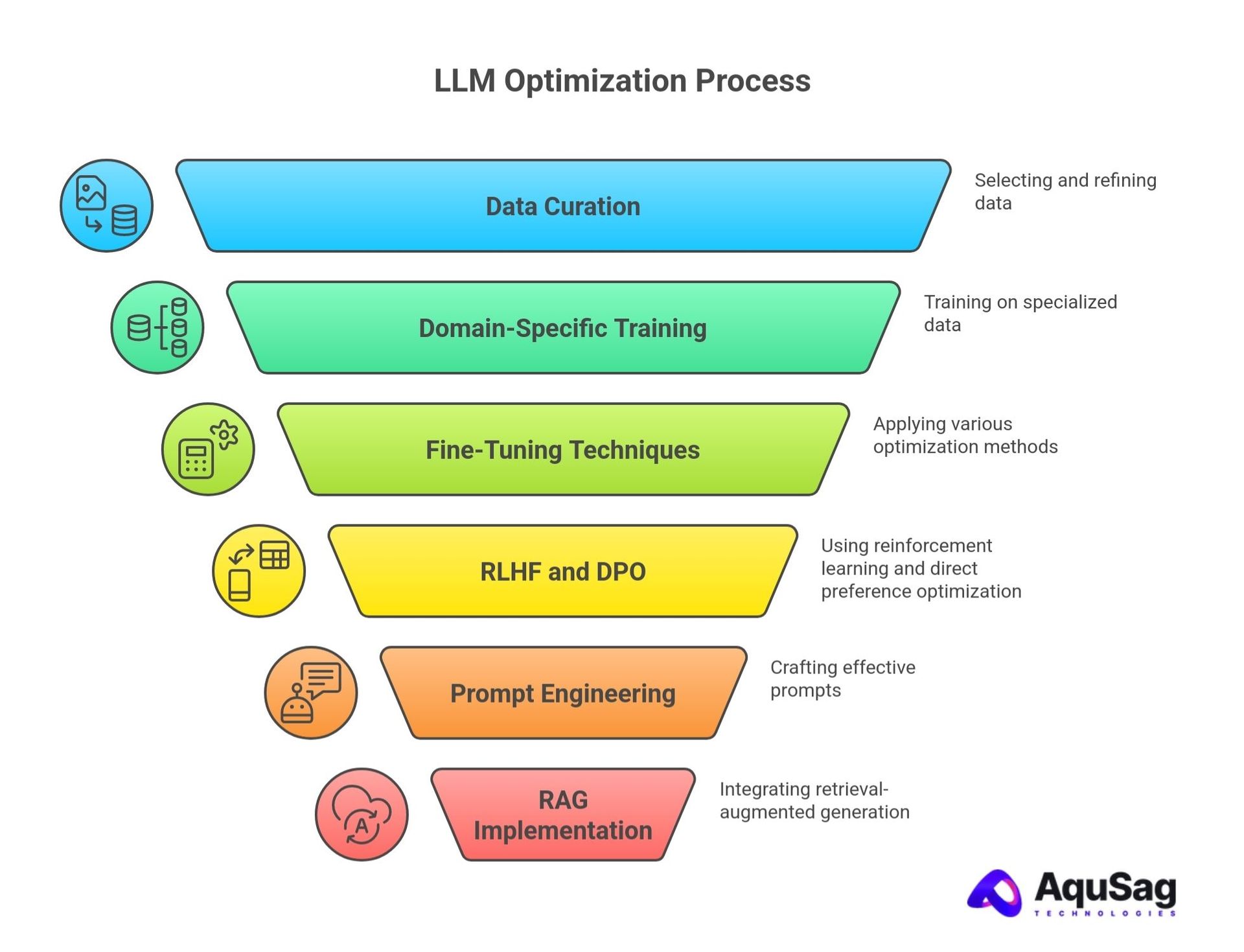

At Aqusag.com, we provide specialized LLM trainer services across agentic workflows, finance, psychology, and multilingual domains. This article examines essential techniques—supervised fine-tuning, instruction tuning, RLHF, DPO, prompt engineering, RAG, and red teaming—that transform foundation models into production-ready solutions.

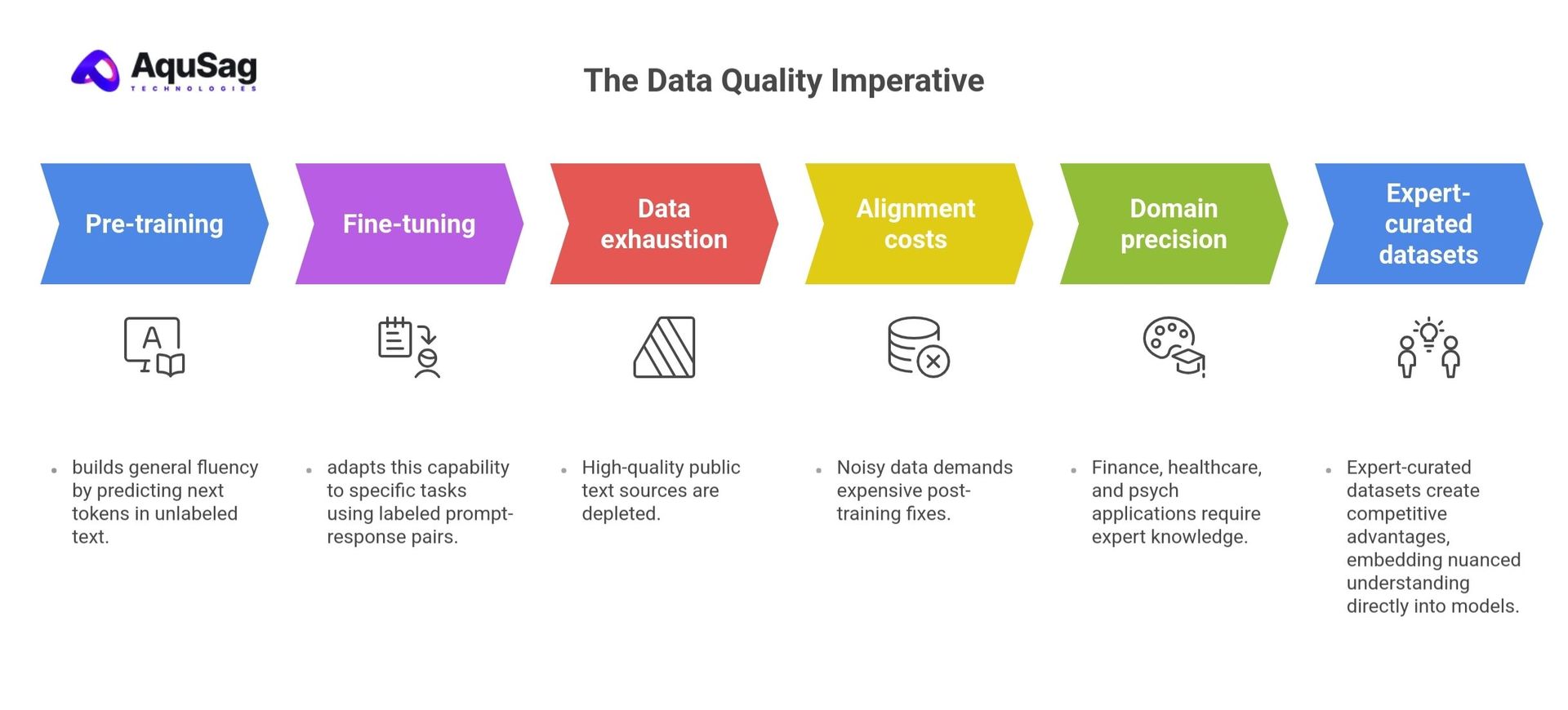

The Data Quality Imperative

Pre-training builds general fluency by predicting next tokens in unlabeled text. Fine-tuning adapts this capability to specific tasks using labeled prompt-response pairs.

Why quality matters:

- Data exhaustion: High-quality public text sources are depleted

- Alignment costs: Noisy data demands expensive post-training fixes

- Domain precision: Finance, healthcare, and psych applications require expert knowledge

This is why many enterprises now partner with external AI workforce specialists rather than building everything in-house—a decision framework we outline in what an AI workforce partner should actually deliver

Core Fine-Tuning Techniques

Supervised Fine-Tuning (SFT)

Adapts pre-trained models to task-specific datasets:

Pre-training: "The sun rises in the..." → "east"

Fine-tuning: "Travel from NY to Singapore" → "Flight routes, visas, layovers"

Applications include financial analysis, legal review, diagnostics, and domain chatbots. Scaling SFT efficiently often requires dedicated trainer pools—a challenge addressed in how high-growth AI companies scale LLM teams without expanding internal headcount

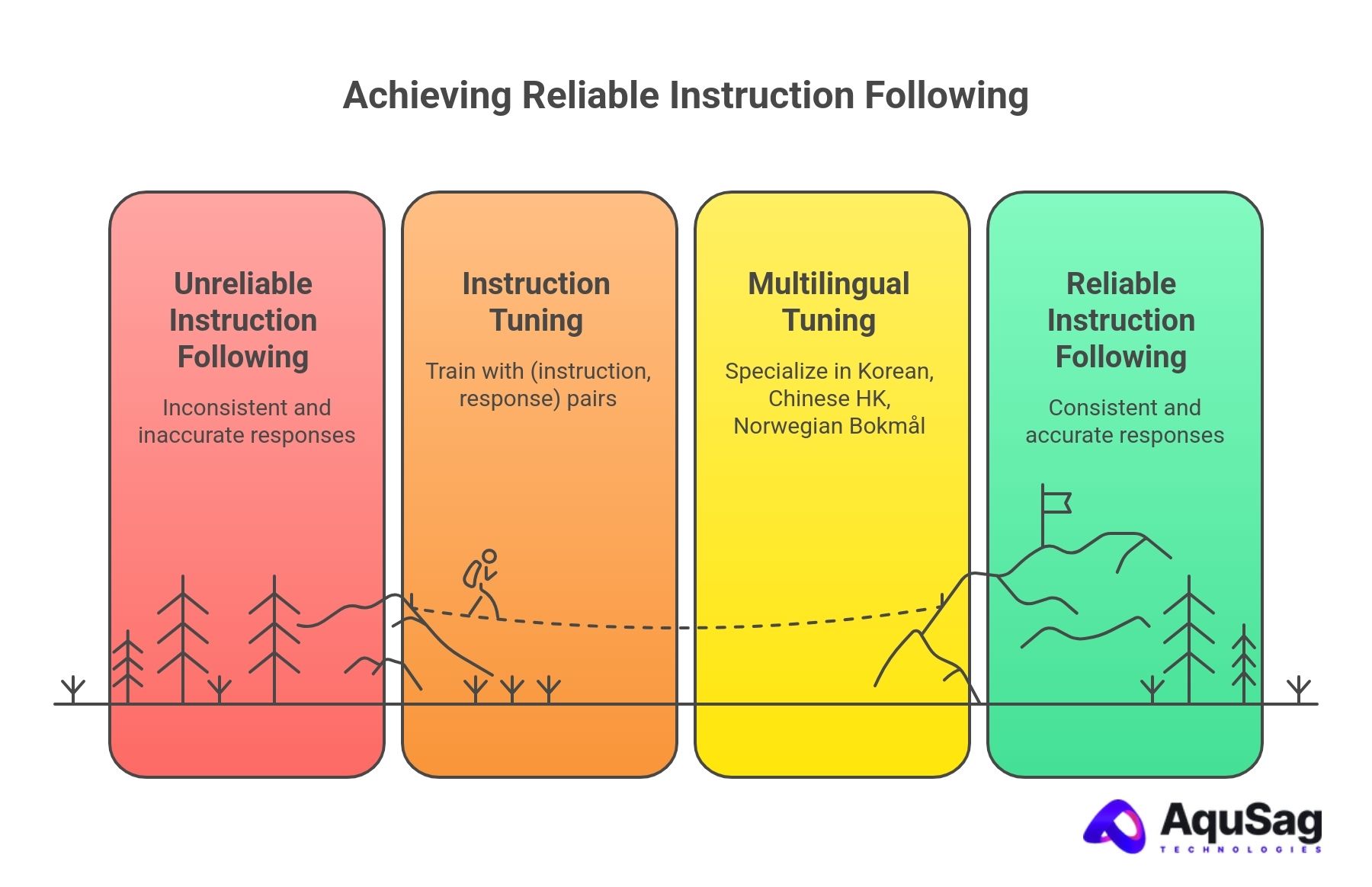

Instruction Tuning

Teaches reliable instruction-following using (instruction, response) pairs:

- Summarization: "Summarize this EHR record"

- Translation: "Translate to Italian"

- Formatting: Structured financial reports

Aqusag.com specialty: Multilingual instruction tuning (Korean, Chinese HK, Norwegian Bokmål)

Advanced Alignment Methods

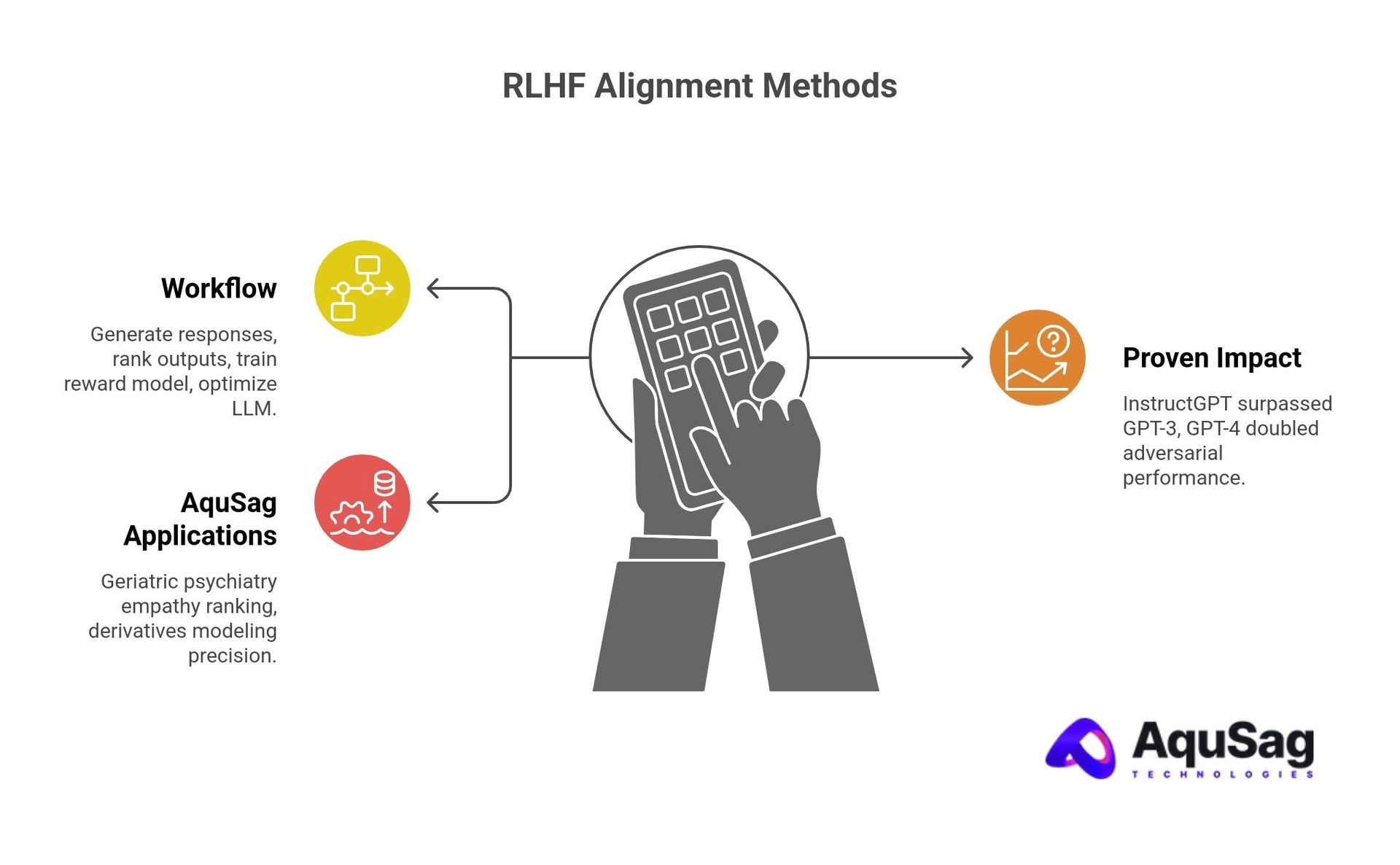

RLHF: Reinforcement Learning from Human Feedback

Aligns models with complex human preferences:

Workflow:

- Generate multiple responses per prompt

- Expert annotators rank outputs (best → worst)

- Train reward model on rankings

- Optimize LLM via reinforcement learning (PPO)

Its operational complexity often pushes companies to seek external delivery models, as detailed in how enterprises build RLHF and GenAI pipelines without specialized internal teams

For a deeper technical breakdown, see The complete guide to RLHF for modern LLMs.

DPO: Direct Preference Optimization

Streamlined RLHF alternative using preference pairs (prompt, preferred, rejected):

Advantages:

- No reward model training

- Stable optimization

- Compute-efficient

- Matches RLHF performance

Ideal for rapid iteration across our 20+ finance and psych trainer specializations.

Lightweight Optimization Strategies

Prompt Engineering

Shapes outputs without retraining:

Technique |

Use Case |

Example |

|---|---|---|

Zero-shot |

Simple classification |

"Analyze sentiment" |

Few-shot |

Pattern matching |

2-3 input/output examples |

Chain-of-Thought |

Complex reasoning |

"Think step-by-step" |

RAG: Retrieval-Augmented Generation

RAG vs Fine-tuning:

RAG |

Fine-tuning |

|

|---|---|---|

Knowledge |

Dynamic retrieval |

Static training data |

Cost |

Query-time |

Full retraini |

Use case |

Proprietary data |

Deep specialization |

Safety & Reliability: Red Teaming

Production LLMs must withstand adversarial attacks:

Attack vectors:

- Prompt injection: "Ignore safety rules"

- Jailbreaking: Role-play bypasses

- Bias amplification: Embedded training data flaws

Red teaming workflow:

- Craft adversarial prompts

- Document failures

- Generate corrective training data

- Realign model

Aqusag.com red team: 24 domain-specialized teams probing finance, psych, and agentic vulnerabilities.

Aqusag.com's Production LLM Services

Expertise across 30+ domains:

Finance ($80-200/hr):

• Debt Capital Markets • Derivatives & Quant

• Project Finance • Structured Finance

• Energy & Renewables • Healthcare Finance

Psychology ($100-200/hr)

• Geriatric Psychiatry • Forensic Psychology

• Sexual Health • Well-being Specialists

Technical:

• Agent Function Calling • ServiceNow Integration

• Pascal/Delphi • Multilingual (7 languages)

Complete workflow:

- Custom dataset curation

- RLHF preference ranking

- Hallucination detection

- Instruction design

- Model benchmarking

- Red teaming & safety

2026 Performance Benchmarks

Technique | Performance Gain | Cost Reduction --------------------|------------------|--------------- Expert Data Curation| 25-35% accuracy | - [attached_file:1] RLHF Alignment | 2x adversarial | - [attached_file:1] DPO Optimization | RLHF equivalent | 40% compute [attached_file:1] RAG Implementation | Real-time facts | 80% vs retrain [attached_file:1] Red Teaming | Safety +15% | - [attached_file:1]

Implementation Roadmap

Phase 1: Foundation

1. Select base model (DataCamp/Zapier rankings)

2. Curate domain dataset (100-10K examples)

3. SFT + instruction tuning

Phase 2: Alignment

4. RLHF/DPO preference loops

5. Prompt engineering optimization

6. RAG pipeline integration

Phase 3: Production

7. Red teaming & safety audit

8. Benchmarking suite

9. Deployment monitoring

10. Continuous iteration

Conclusion

The LLM era has matured beyond scale. Quality data + expert alignment = production success. Aqusag.com delivers complete training pipelines—from agentic function calling to specialized finance and psychology expertise.

Contact our specialists to optimize your LLM development.