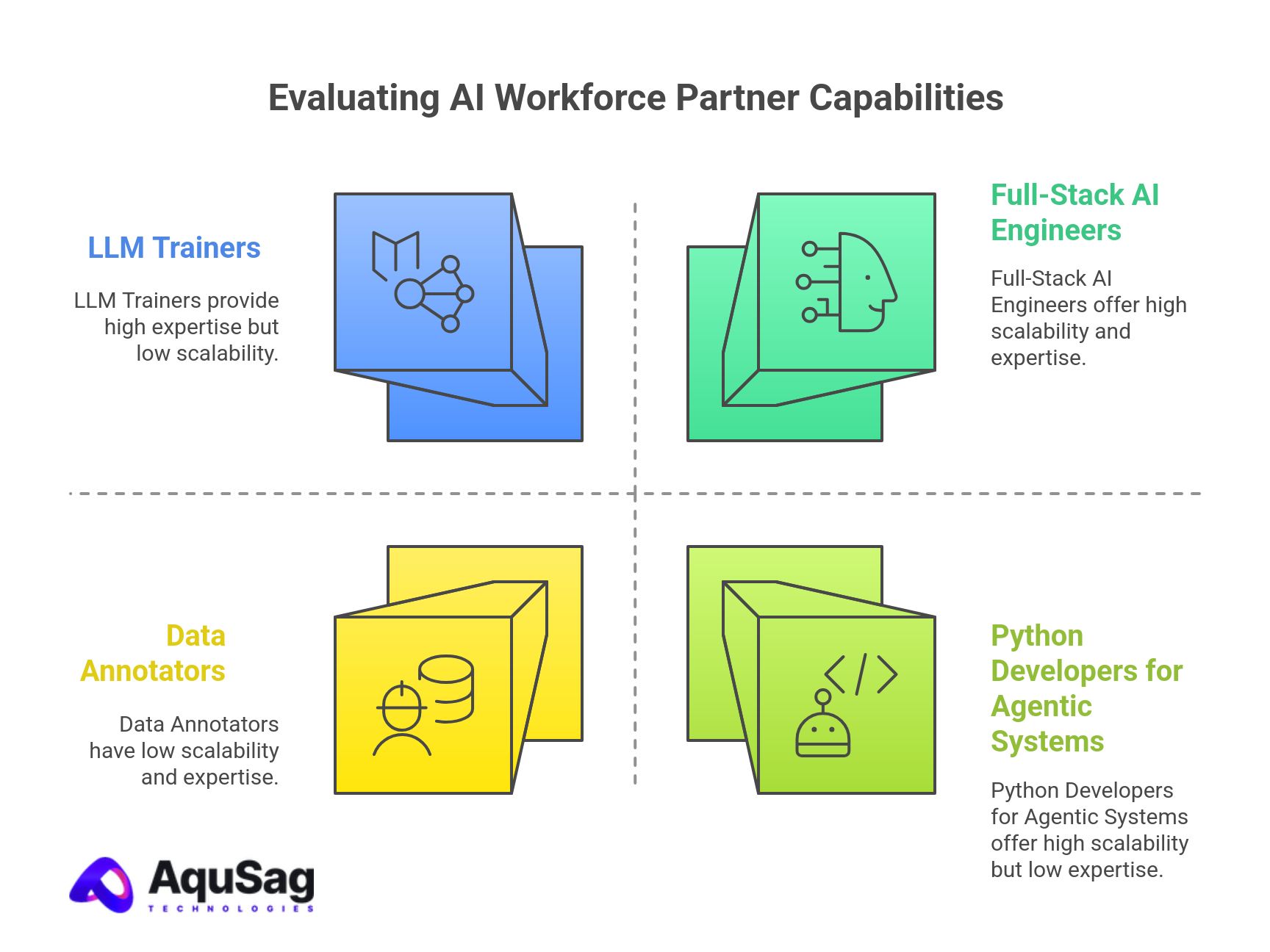

The rise of large language models has created a new category of operational demands for fast-growing AI companies. These demands are very different from traditional software staffing needs. Companies are no longer looking only for engineers; they are looking for LLM Trainers, RLHF Contributors, Evaluation Specialists, Python Developers for agentic systems, Full-Stack AI Engineers, Data Annotators, Model Validation Contributors, and domain-specific reviewers who work within highly structured workflows.

As workloads surge and evolve unpredictably, internal teams quickly reach their limits. Hiring full-time employees for every role is not practical, and conventional staffing vendors fall short as well. Modern AI companies need a partner capable of supplying trained, scalable, multi-role AI workforces with predictable quality, rapid deployment speed, and structured governance.

But despite the urgency, many companies struggle to evaluate AI workforce partners correctly. What does a reliable partner actually do behind the scenes? What processes must be in place for a partner to support large-scale LLM training, RLHF workflows, evaluation cycles, or high-volume annotation projects?

This article explores these questions in detail. By the end, companies will understand not only what to look for in an AI workforce partner, but also how to assess readiness, avoid mismatches, and select a partner capable of supporting complex AI programs from day one.

As organizations transition from AI experimentation to full-scale production, the ability to scale teams efficiently becomes a decisive factor. High-growth AI companies often face pressure to expand LLM capabilities rapidly while avoiding long hiring cycles and rising internal costs. This challenge is explored in depth in "How Do High-Growth AI Companies Build and Scale LLM Teams Fast Without Expanding Internal Headcount?", which outlines scalable workforce models that preserve speed, quality, and operational control.

Why has choosing an AI workforce partner become a strategic decision?

AI companies today operate in an environment where model behavior, dataset requirements, and experimentation velocity change weekly. Teams must adjust quickly to new instructions, new scoring methodologies, new model versions, and new layers of evaluation.

Traditional staffing approaches cannot support this fluidity. AI programs are not linear. They involve intense spikes in volume when new model versions are trained, followed by quieter periods, followed by entirely new workloads. A static hiring approach or a slow-moving vendor becomes a liability.

A strong AI workforce partner ensures that scaling no longer depends on internal headcount or long recruitment cycles. It allows companies to begin new workflows immediately, deploy trained teams within days, and adjust workforce composition in real time, whether that means adding 50 LLM Trainers, shifting from annotation to evaluation, or activating Python developers to build supporting infrastructure.

The strategic value comes from the partner’s ability to enable speed, agility, and continuity in environments where internal structures struggle to keep up.

What core capabilities should an AI workforce partner demonstrate?

A workforce partner for AI should not simply be a source of talent. It must be a delivery engine. This engine is powered by training pipelines, role-specific readiness, domain understanding, governance controls, bench availability, and the ability to maintain continuity across long-term projects.

Companies working on LLMs, RLHF loops, evaluation frameworks, and agentic workflows need partners capable of supplying roles such as:

- LLM Trainers and Instruction Specialists

- RLHF Annotators and Reinforcement Contributors

- Evaluation and Model Scoring Teams

- Python Developers for AI Services

- Backend and Full-Stack Engineers for AI Applications

- Data Engineers and ETL Specialists

- Data Annotators for structured and unstructured content

- Model Validation and Repository Review Contributors

- Agentic Workflow Trainers and System Testers

- Program Managers for AI Delivery Pipelines

A reliable partner maintains these roles in ongoing readiness, enabling clients to scale with minimal friction.

But capability is not limited to supplying roles. A true AI workforce partner also establishes:

- Standardized knowledge baselines

- Domain-specific onboarding tracks

- Consistent documentation and job aids

- Multi-layer quality systems

- Review pipelines

- Delivery governance

- Workforce continuity plans

- Rapid replacement processes

These components function together to ensure that teams not only join quickly, but also perform at consistent quality levels even when workloads expand significantly.

Why do so many AI companies struggle to select the right workforce partner?

Most companies evaluate vendors through the lens of traditional staffing metrics: CV quality, recruitment timelines, and hourly rates. While these metrics matter, they are only a small part of the picture.

AI workflows are far more sensitive to detail. They require partners who understand instruction following, LLM scoring rules, annotation guidelines, chain-of-thought restrictions, domain-specific review formats, and the nuances of model behavior.

A partner who simply forwards CVs cannot succeed in a world where:

- Guidelines evolve continuously

- Quality thresholds must be enforced strictly

- Evaluations must be consistent across hundreds of contributors

- Replacement cycles must be instant

- Communication between internal and external teams must be seamless

- Contributors must understand model behavior, hallucination patterns, and prompt response structures

Most workforce providers are not designed for this level of operational specificity. The inability to differentiate between a typical vendor and an AI-specialized delivery partner is what causes delays, quality breakdowns, and project inefficiencies.

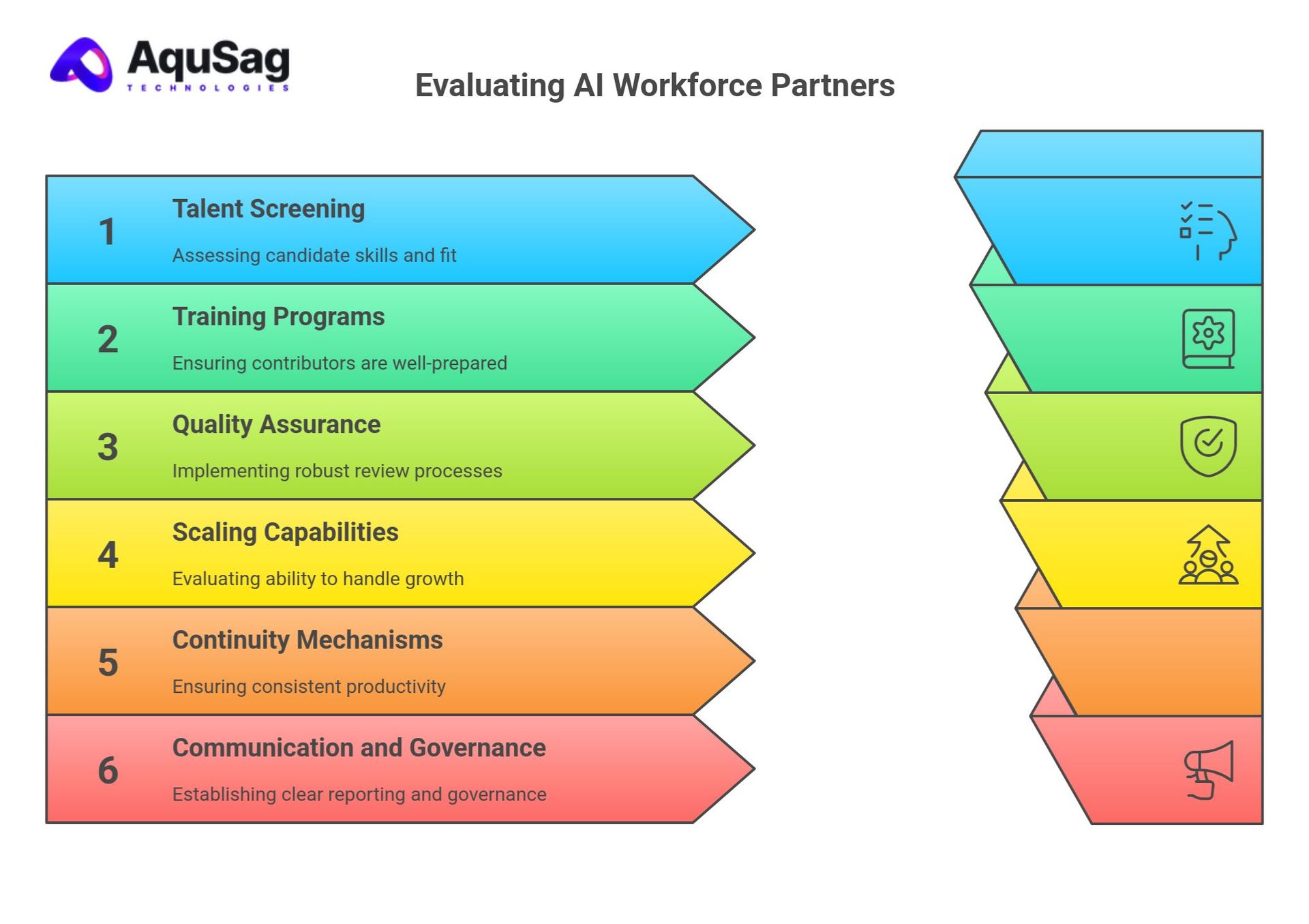

What questions should companies ask before choosing an AI workforce partner?

A company evaluating a workforce partner must begin by understanding how the partner actually manages talent behind the scenes. The right partner will have clear, transparent answers to questions such as:

How do you screen AI talent?

LLM workflows require contributors who can follow instructions precisely, understand context, and maintain consistency across tasks. Screening should include technical reasoning, linguistic capability, domain specialization, and an understanding of AI behavior.

How do you train contributors before deployment?

Training is not optional. A partner must have structured onboarding tracks for roles like LLM trainers, evaluators, annotators, and Python developers for AI workloads.

What quality assurance systems do you use?

Quality is the heart of LLM training. Companies must ask about the partner’s review layers, scoring methods, escalation processes, and performance dashboards.

How do you handle scaling?

Scaling to 5 contributors is easy. Scaling to 50 is a different challenge. Scaling past 100 requires a mature operational engine.

How do you ensure continuity and prevent productivity drop during transitions?

A drop in productivity during transitions is one of the biggest risks in AI delivery. A partner must have continuity mechanisms and replacement cycles ready at all times.

How do communication, reporting, and governance work?

A reliable partner behaves like an extension of the client’s internal team. This includes daily communication flows, performance reporting, deliverable reviews, and escalation paths.

By asking these questions, companies quickly differentiate between basic staffing vendors and true AI delivery partners.

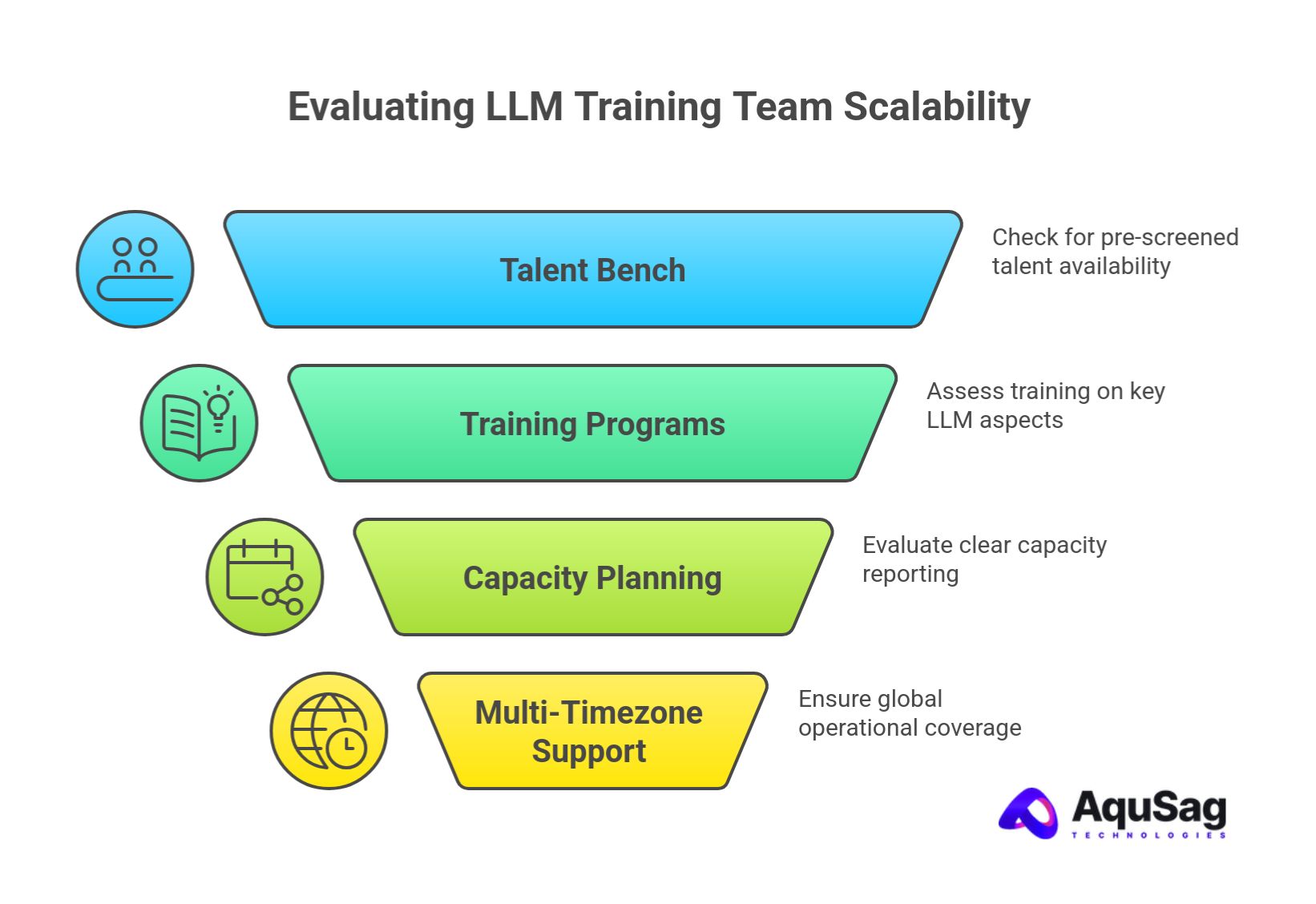

How can companies evaluate the partner’s ability to scale LLM training teams?

Scaling LLM teams requires more than having people available. It requires the infrastructure to mobilize contributors quickly, align them with guidelines, and maintain performance through governance.

To evaluate this, companies should assess:

First, whether the partner maintains a bench of pre-screened talent across roles such as LLM Trainers and RLHF Contributors. A partner who recruits from scratch for every requirement cannot meet scaling timelines.

Second, whether the partner trains contributors on instruction formats, evaluation rules, domain constraints, safety frameworks, and hallucination detection. Contributors trained in advance can be productive from their first day on the project.

Third, whether the partner has clear capacity planning. AI companies should expect daily reporting with metrics like active headcount, readiness pipeline, and projected availability.

Fourth, whether the partner can support multi-timezone delivery. Global operations require follow-the-sun coverage.

By analyzing these components, AI companies understand the partner’s true operational strength.
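As a rough illustration of the capacity reporting described above, the sketch below computes a per-role daily summary from active headcount, the readiness pipeline, and the bench. The `RoleCapacity` fields, the function names, and the sample numbers are all hypothetical; a real partner's report will use its own definitions.

```python
from dataclasses import dataclass


@dataclass
class RoleCapacity:
    """Hypothetical per-role snapshot a partner might report each day."""
    role: str
    active: int        # contributors currently working on the project
    in_training: int   # contributors in the readiness pipeline
    bench: int         # pre-screened contributors not yet assigned
    requested: int     # headcount the client has asked for


def capacity_gap(row: RoleCapacity) -> int:
    """Headcount still missing after counting active, in-training, and bench."""
    available = row.active + row.in_training + row.bench
    return max(0, row.requested - available)


def daily_report(rows):
    """Summarize active headcount, projected availability, and gap per role."""
    return {
        r.role: {
            "active": r.active,
            # Assumes everyone in training becomes available once onboarded.
            "projected": r.active + r.in_training,
            "gap": capacity_gap(r),
        }
        for r in rows
    }


if __name__ == "__main__":
    rows = [
        RoleCapacity("LLM Trainer", active=30, in_training=10, bench=5, requested=50),
        RoleCapacity("RLHF Contributor", active=20, in_training=5, bench=10, requested=30),
    ]
    for role, summary in daily_report(rows).items():
        print(role, summary)
```

A non-zero gap for a role signals that the partner would have to recruit from scratch to meet the request, which is the scaling risk the first check is meant to surface.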

Why does quality become the deciding factor when evaluating partners?

LLM training and evaluation depend entirely on consistency. A single contributor producing out-of-spec outputs can affect entire model batches. The effects are visible immediately: models drift, training quality drops, and evaluation cycles produce noise.

A mature partner ensures quality through:

- Structured onboarding

- Standardized instructions

- Reviewer teams

- Continuous feedback loops

- Corrective coaching

- Scorecards

- Productivity and accuracy dashboards

- Controlled replacement cycles

This discipline is rarely found in general staffing vendors. AI companies therefore need partners who view quality not as a metric, but as an operational philosophy.

The best partners think like AI product teams. They understand the stakes of each output and build systems that reflect that responsibility.
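One simple way to picture a scorecard is to measure how often each contributor agrees with the majority label on shared tasks and to flag anyone below a threshold for coaching. The sketch below is a minimal example under stated assumptions: the `(task_id, contributor_id, label)` record format, the function names, and the 0.8 threshold are illustrative choices, not a description of any particular partner's system.

```python
from collections import Counter, defaultdict


def majority_labels(judgments):
    """Return the most common label per task.

    judgments: iterable of (task_id, contributor_id, label) tuples.
    Ties are broken by whichever label was seen first.
    """
    by_task = defaultdict(list)
    for task_id, _, label in judgments:
        by_task[task_id].append(label)
    return {t: Counter(labels).most_common(1)[0][0] for t, labels in by_task.items()}


def agreement_rates(judgments):
    """Fraction of each contributor's labels that match the task majority."""
    consensus = majority_labels(judgments)
    hits = defaultdict(int)
    totals = defaultdict(int)
    for task_id, contributor, label in judgments:
        totals[contributor] += 1
        if label == consensus[task_id]:
            hits[contributor] += 1
    return {c: hits[c] / totals[c] for c in totals}


def flag_for_review(judgments, threshold=0.8):
    """Contributors whose agreement rate falls below the (assumed) threshold."""
    rates = agreement_rates(judgments)
    return sorted(c for c, rate in rates.items() if rate < threshold)


if __name__ == "__main__":
    sample = [
        ("t1", "a", "good"), ("t1", "b", "good"), ("t1", "c", "bad"),
        ("t2", "a", "bad"), ("t2", "b", "bad"), ("t2", "c", "good"),
        ("t3", "a", "good"), ("t3", "b", "good"), ("t3", "c", "good"),
    ]
    print(agreement_rates(sample))
    print(flag_for_review(sample))
```

Majority agreement is only a proxy for correctness; in practice a reviewer team or gold-standard tasks would usually supply the reference labels.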

Reinforcement Learning from Human Feedback (RLHF) plays a critical role in improving model accuracy, alignment, and usability. However, successful RLHF implementation requires clear workflows, trained contributors, and strong quality governance. For a comprehensive overview of RLHF operations, staffing considerations, and execution best practices, refer to "The Complete Guide to RLHF for Modern LLMs: Workflows, Staffing, and Best Practices".

What does strong communication between an AI company and its workforce partner look like?

Communication is one of the strongest predictors of success in AI workforce partnerships. A high-performing relationship is not transactional. It is co-managed, coordinated, and jointly optimized.

Daily communication ensures clarity on volumes, priority tasks, guideline changes, model version updates, evaluation methodology shifts, and expected quality thresholds. Mature partners adapt quickly and relay these changes across cohorts instantly.

Equally important is transparency. A partner must proactively identify risks, flag performance dips, forecast supply needs, and suggest process improvements. The goal is not only to provide workforce capacity but to ensure operational stability across the entire AI workflow.

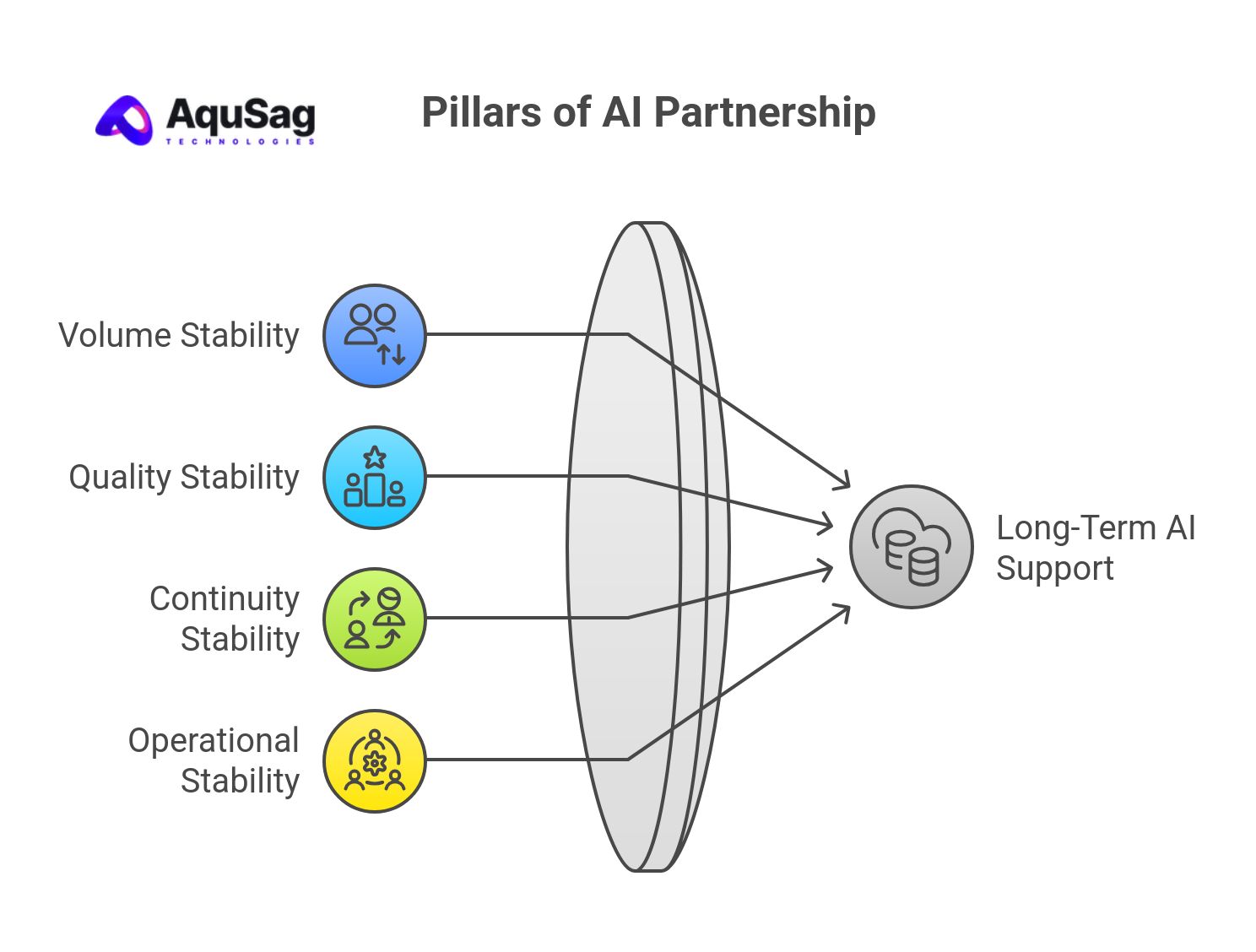

How should companies judge whether a partner can support long-term AI programs?

The strongest partners demonstrate stability across multiple dimensions:

Volume stability

They can support increasing headcount over months without degradation.

Quality stability

Their contributors consistently meet or exceed performance requirements.

Continuity stability

Replacements, transitions, and training cycles happen smoothly.

Operational stability

Documentation, communication, and governance remain uniform across all projects.

These attributes indicate the partner is not only capable of supporting short-term spikes but can also sustain long-term AI operations such as continuous LLM evaluation, ongoing data annotation, or evolving RLHF pipelines.

Why is domain specialization becoming important in selecting AI workforce partners?

As AI adoption expands across industries, domain expertise is becoming essential.

A partner must be able to supply contributors familiar with finance, healthcare, retail, logistics, consumer software, cybersecurity, or legal processes depending on the project. Domain-specific annotation, evaluation, and instruction tuning yield significantly higher-quality outputs.

A partner that supports multi-domain AI workloads becomes valuable for companies building models that require nuanced understanding of specialized subjects.

What is the right way to start with an AI workforce partner?

Most successful engagements begin with a structured onboarding plan.

Companies typically start with a small pilot team, clarify workflows, and refine guidelines before scaling to larger cohorts. Once roles, expectations, and evaluation methods are aligned, scaling becomes predictable and controlled.

This phased approach ensures that both sides operate with precision and transparency, reducing the risk of misalignment as the engagement grows.

As AI programs mature, organizations must scale LLM training and RLHF operations without disrupting product timelines. Without the right delivery structure, expanded AI workloads can introduce bottlenecks and slow innovation. This balance between scale and execution speed is addressed in "How to Scale LLM Training and RLHF Operations Without Slowing Down Product Delivery", which explains how teams can maintain momentum while increasing AI output.

How should AI companies approach partner selection in the future?

The more AI evolves, the more vital flexible workforce models become.

Companies cannot rely on traditional staffing or internal hiring cycles to support dynamic workloads. They need partners who understand AI delivery at a deep operational level, who maintain trained talent pools, who build systems for quality and scale, and who align their infrastructure with the client’s product goals.

A strong AI workforce partner becomes an extension of the internal team.

They bring agility, stability, and operational expertise to environments where internal structures cannot move fast enough. Selecting the right partner therefore becomes a strategic decision, one that affects velocity, quality, and long-term competitiveness.

An effective AI workforce partner must deliver more than skilled contributors; it must enable repeatable, end-to-end delivery pipelines. Enterprises increasingly require structured support for RLHF workflows, LLM evaluation, and GenAI deployment without building highly specialized internal teams. A practical framework for achieving this is discussed in "How Can Enterprises Build RLHF, LLM, and GenAI Delivery Pipelines Without Specialized Internal Teams?", which highlights scalable execution models designed for enterprise environments.

FAQ: Choosing the Right AI Workforce Partner

1. How do I know if a workforce partner is capable of supporting AI projects?

Look for structured training, readiness pipelines, quality systems, and the ability to deploy specialized roles like LLM Trainers, RLHF Contributors, and Evaluation Specialists.

2. What roles can be scaled through an AI workforce partner?

LLM Trainers, Evaluators, RLHF Annotators, Python Engineers for AI, Data Annotators, Data Engineers, Backend Developers, Full-Stack Engineers, and domain-specialized contributors.

3. What separates an AI-focused partner from a traditional staffing vendor?

AI-focused partners provide trained cohorts, multi-layer QA systems, domain alignment, operational governance, and the ability to scale rapidly.

4. How fast can an AI workforce partner scale?

Mature partners can activate teams within days and scale to dozens or hundreds of contributors in under a week.

5. What is the biggest risk of choosing the wrong partner?

Inconsistent quality, slow scaling, delivery breakdowns, and model degradation due to poor evaluation or annotation standards.